
Title: REMS

Authors: Clare Tagg (Tagg Oram Partnership, www.taggoram.co.uk), Somia Nasim and Peter Goff (The Qualifications & Curriculum Development Agency, www.qcda.org.uk)

Analysis Processes

While the management of evidence is a key component of REMS, making use of that evidence in an effective and efficient manner is also crucial. It is in analysis that the naming convention, classification and coding of the evidence become vital, which is why we were so thorough when setting these up.

The system became operational in October 2008, when the first quarterly analysis was produced for stakeholders. At this point some problems were encountered and the following key questions were raised:

- How do we analyse the evidence in a productive manner?

- What reporting is going to be useful for the stakeholders?

- How do we account for gaps in the evidence?

- How do we handle the high volumes of evidence?

In order to make the analysis and reporting constructive, the team decided to report in a consistent way: via strand sign-off, thematic and quarterly analysis and reporting. We devised a standard reporting template for the various analyses. To ensure buy-in and validation of the evidence in the system, strand feedback and sign-off reports were produced for the relevant strand manager. This gave the strand manager the opportunity to comment on the data in the system and on any emerging themes. It also helped us to ensure that all system processes were working and that the right content and conclusions were reported.

Colleagues' and stakeholders' key requirements of the system were that the evidence be robust, the analysis comprehensive and technical jargon avoided. This was one of the key challenges: the system held so much valuable evidence, but we needed to ensure the right evidence was selected to answer the query or question.

The query feature in NVivo8 makes the following possible:

- Searching a set of sources using the 'Find' function.

- Google-type searches on a selection of all sources and/or coding.

- Using the coding framework to retrieve relevant evidence - using 'coding query' and coding tree.

- Making connections between sources, coding and attributes to produce the equivalent of a qualitative cross-tab - using the 'matrix' function.
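The 'coding query' and 'matrix' ideas in this list can be illustrated outside NVivo with a small sketch. This is not NVivo's interface, nor the method the REMS team used; it simply assumes that each coded passage can be represented as a (source, node, attribute) record. The source names, nodes and attribute values are invented for illustration, and the function names are hypothetical.

```python
from collections import Counter

# Hypothetical coded references: (source name, coding node, source attribute).
# All values are invented for illustration; they are not REMS data.
CODED_REFERENCES = [
    ("report_a", "Apprenticeships", "QCDA"),
    ("report_a", "Functional Skills", "QCDA"),
    ("report_b", "Apprenticeships", "Partner"),
    ("report_c", "Apprenticeships", "Partner"),
    ("report_c", "Functional Skills", "Stakeholder"),
]


def coding_query(references, node, attribute=None):
    """Return the sources coded at `node`, optionally limited to one attribute value."""
    return sorted(
        {src for src, n, attr in references
         if n == node and (attribute is None or attr == attribute)}
    )


def matrix_query(references):
    """Count coded references for each (node, attribute) pair, like a cross-tab."""
    return Counter((node, attr) for _, node, attr in references)


if __name__ == "__main__":
    # Sources coded at one theme, restricted to one kind of author.
    print(coding_query(CODED_REFERENCES, "Apprenticeships", attribute="Partner"))
    # One row per (theme, author type) cell of the cross-tab.
    for (node, attr), count in sorted(matrix_query(CODED_REFERENCES).items()):
        print(f"{node:20} {attr:12} {count}")
```

The first function narrows evidence by theme and context; the second shows how the same records can be tabulated to compare themes across groups of sources.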

Once the team understood the capabilities and restrictions of the system and NVivo, we needed to ensure that the system allowed us to undertake analyses, both regularly and on an ad hoc basis, in the following four ways.

Fast and effective searching in a complex evidence environment

The Research and Evaluation team are often asked to produce a quick briefing or snapshot on a particular topic or issue. Colleagues want to know:

- What do we already know?

- What is the current position?

- What is all the evidence saying?

For these requests, time is the critical issue, as a response is required immediately or within days. At the same time a comprehensive response is called for, one in which the perceptions and policy of Government, stakeholders and partners, as well as QCDA, are all considered.

The coding framework was developed from the policy. As a result, it is often fairly easy to select the theme from the coding tree and analyse the information, using attributes and the query features of NVivo to limit the evidence to the appropriate context. Example issue: Raising the participation age to 18.

Identification of emerging themes and issues, including areas where evidence is scarce/missing

As the coding framework was constructed using QCDA's strategic objectives, when the evidence is collated and coded it becomes apparent where themes are emerging (eg Apprenticeships) or where gaps exist (eg the impact of pre-14 education on the reform). Further detailed analysis establishes the exact themes and gaps in the data.
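As a rough illustration of how emerging themes and gaps show up once evidence is coded against a fixed framework, the sketch below counts coded references per node and labels each node. It is an assumption-laden example, not the REMS procedure: the node list, the reference counts and the `emerging_at` threshold are all hypothetical.

```python
from collections import Counter

# Hypothetical coding framework and coded references (invented for illustration).
FRAMEWORK_NODES = ["Apprenticeships", "Functional Skills", "Pre-14 education"]
CODED_NODES = [
    "Apprenticeships", "Apprenticeships", "Apprenticeships",
    "Functional Skills",
]


def classify_nodes(framework, coded, emerging_at=3):
    """Label each framework node as 'gap', 'sparse' or 'emerging'.

    `emerging_at` is an arbitrary, assumed threshold; in practice the
    judgement would rest on detailed reading of the evidence.
    """
    counts = Counter(coded)
    labels = {}
    for node in framework:
        n = counts[node]  # Counter returns 0 for nodes with no coded evidence
        if n == 0:
            labels[node] = "gap"
        elif n >= emerging_at:
            labels[node] = "emerging"
        else:
            labels[node] = "sparse"
    return labels


if __name__ == "__main__":
    for node, label in classify_nodes(FRAMEWORK_NODES, CODED_NODES).items():
        print(f"{node}: {label}")
```

Because the framework is listed up front, nodes with no coded evidence are reported explicitly rather than simply being absent from the counts.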

Development of evidence-informed policy during the hectic period of early implementation/evaluation

A key feature of the system is to ensure any strategic or policy decisions are based on evidence. Current, complete and reliable evidence must therefore be in the system, so that implementation issues are considered and flagged at the earliest opportunity. At the evaluation stage the secondary evidence held in REMS, combined with other primary and statistical data, helps to ensure that evaluations are an accurate reflection of the programme areas. Example analysis: Functional Skills on hurdle and assessment (see www.taggoram.co.uk/research/cases/REMS).

Single evidence base which can be probed by different stakeholders to provide tailored perspectives

The system was designed to include not only QCDA published evidence, but also relevant evidence produced by stakeholders and partners. As a result, the evidence held in the system is of value to a range of stakeholders and partners, and they have been given the opportunity to use and query the database for their work. Example queries: Learning Skills Council request for information on delivery issues and internal impact of the recession on education and skills.

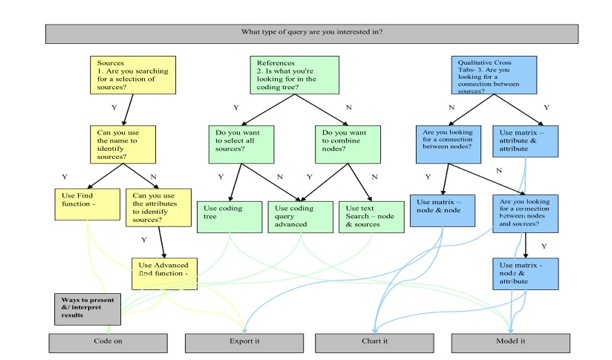

In order to assist users (both internal and external) to use the system the team have constructed an analysis process manual (see www.taggoram.co.uk/research/cases/REMS) which outlines the ways in which data can be retrieved and used. The diagram below shows the process map from the manual.
